Agile Process Maturity Model

"The goal of the Agile Process Maturity Model (APMM) is to provide a framework which provides context for the plethora of agile methodologies and practices out there today. The APMM defines three levels, each of which build upon each other, for agile processes and practices."

APMM Level 1: Core Agile Development

Level 1 agile processes address a portion of the system life cycle (SDLC). Examples of Level 1 agile processes include Scrum, Extreme Programming, Agile Modeling and Agile Data.

APMM Level 2: Disciplined Agile Delivery

Level 2 agile processes extend Level 1 to address the full system delivery life cycle (SDLC). As the criteria for disciplined agile development suggest, they also tend to dial up certain aspects of agile development, such as testing, measurement, and process improvement. Furthermore, they include explicit mechanisms to support effective (ideally lean) governance. Examples of Level 2 agile processes include Hybrid Processes (Scrum@XP), Rational Unified Process, Open Unified Process, Harmony ESW and Dynamic Systems Development Method.

APMM Level 3: Agility at Scale

In the early days of agile, the applications where agile development was applied were smaller in scope and relatively straightforward. Today, the picture has changed significantly and organizations want to apply agile development to a broader set of projects. Agile hence needs to adapt to deal with the many business, organizational, and technical complexities that today's software development organizations are facing. This is what Level 3 of the APMM is all about: explicitly addressing the complexities which disciplined agile delivery teams face in the real world.

The goal of the APMM is "to define a framework which can be used to put the myriad agile processes into context."

Agile Maturity Model

The AMM "tries to quantify degree of responsiveness of a team to an organization's business needs. The philosophy being that increasing the discipline and proficiency of executing some best practices will increase the degree of responsiveness."

The AMM primarily focuses on application development activities. The dimensions of the AMM are:

- Testing

- Source code management

- Collective code ownership

- Collaboration

- Responsiveness to business

- Assurance and governance

- Story formation

- Design simplicity

- Build process

For each of the above dimensions, the AMM lists behavioral patterns exhibited by teams. Teams can use the AMM as a reference to identify the behaviors they currently exhibit, compare them against the listed patterns, and plan how to alter and tailor them.

Testing

- Acceptance testing in lieu of all other tests

- No unit testing framework, ad hoc testing (manual testing of individual components/controls)

- Unit testing, manual functional testing; application has a testable architecture

- Unit testing integrated into build process, testing automated as much as reasonable given application

- Developers write unit tests before writing functional code

- Tests are identified and produced as part of story creation

- Automated functional testing (e.g., GUI testing); stories remain in development until all bugs are fixed or deferred

Source code management

- Traditional schemes based on file-locking and time-sharing

- SCM supports versioning of code

- IDE(s) integrate with SCM; developers include meaningful comments in commits

- SCM supports merging; optimistic check-ins

- SCM supports atomic commits

- All development collateral is in SCM

- SCM is transparent to delivery team, behind build process

Collective code ownership

- Knowledge held by specific team members; people work in isolation

- No pairing, people work alone, some informal process for keeping people informed

- Pairing; no code locking

- Pairing scheme ensures rotation

- Team signs up for functionality rather than assignment to individuals

- Within a sprint, functionality delivery is signed up for just in time

- Old (bugs) and new functionality are queued; development team pops the stack in real time

Collaboration

- Regular progress updates from management to the delivery team (as opposed to the other way around); irregular team meetings

- Project collaboration tools (wiki, mailing list, IM) in place and used throughout project team; project status published and visible to all

- Daily stand-ups and sprint meetings; problem solving is bottom-up as opposed to top-down; team sets sprint objectives and agrees on estimates

- Integrated, continuous build process, with build status notification to the team and collective responsibility for state of the build

- Business is part of the team, stakeholders accept working software at reviews in lieu of other tracking or progress metrics

- Frequent (near-real time) prioritization of old and new functionality

- Build automatically deploys to QA environment available to any interested party

Responsiveness to business

- Frozen specification, unresponsive to business value

- Team tries to accommodate new requirements by ad hoc change requests ("pile it on")

- Iterative process with sprints of length short enough to respond to business change

- Showcases per sprint; business prioritizes functionality per sprint

- Continuous involvement of the business on the team: business certifies story complete out of dev; involved in (rather than approval of) story definition and acceptance tests

- Business writes high-level stories for all requests; organizational story queue exists; prioritization decisions made from full story backlog

- Development process is integral to business initiatives; development teams work as a part of the business unit rather than as a service to the business unit

Assurance and governance

- Status reports document progress; schedule acts as plan

- Concept of release planning is introduced

- Sprint planning is introduced

- Plan becomes communication and tracking tool rather than an exception report

- Every sprint review examines value delivered and assesses next sprint by need, priorities (and delivery)

- Organizational adoption: introduction of portfolio planning and global optimization of resources (use of metrics and standard measures)

- Business reviews of value and return help teams on a regular basis, based upon status

Story formation

- Development tasks extracted from voluminous, frozen requirements artifacts

- Production of lightweight artifacts (e.g., panel flows) to drive high-level requirements development

- Mapping granular requirements (e.g., use cases) to high-level user requirements

- Marshaling use cases into discrete statements of functionality that can be delivered in time-boxed development sprints

- Stories are an expression of end-to-end functionality to be developed, including testable acceptance criteria and a statement of value

- Spontaneous story development provided by business for in-flight projects; stories not derived from extant sources but are immediate expressions of customer demand/need

- Global repository of functional requirements for stories developed by business for any business requirements, including a formal measure of business value delivered

Design simplicity

- Big up-front design that attempts to accommodate all potential future needs

- Application of fundamental design patterns

- Structural refactoring to decouple application architecture

- Doing only what needs to be done

- Aggressive and constant refactoring to improve the quality/simplicity of extant code

- Spiking solutions with each release to introduce new ideas and challenge architectural decisions

- Technical design decisions taken with each story

Build process

- Ad-hoc build requests; scripting and component marshalling performed manually

- Consistent, repeatable build process with durable artifacts executed manually with each release

- Build is automated, executed on a timed basis

- Unit tests are integrated with the automated build; team is constantly notified of the status of the build; build triggered by SCM updates

- Tests and metrics (e.g., code quality, complexity, check style) are integrated as gatekeeper events; build data archived and reported in build portal/dashboard

- All product components advertise dependencies; master repository established

- Product integration tests included; build process executes automatic deployment to QA testing environment

This approach resembles, in a way, how CMMI is expressed as a model. It lists dimensions (key process areas) and then different maturity levels for each one. The model's scope is very narrow and well expressed (IMO), and it could be useful for an organization that wants to define how responsive, in agile terms, the development department is to the business.
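As a rough illustration of how a team might use the AMM as a reference, the sketch below stores the patterns of two dimensions (abbreviated from the lists above) and looks up the next pattern to aim for. It assumes the patterns are listed from least to most mature, which the model implies but this post does not state outright. The names `AMM_PATTERNS` and `next_steps`, and the 1-based position numbers, are made up for this example and are not part of the AMM.

```python
# Behavioral patterns for two of the nine AMM dimensions, abbreviated from
# the lists above. ASSUMPTION: list order runs from least to most mature.
AMM_PATTERNS = {
    "Testing": [
        "Acceptance testing in lieu of all other tests",
        "No unit testing framework, ad hoc testing",
        "Unit testing, manual functional testing",
        "Unit testing integrated into build process",
        "Developers write unit tests before functional code",
        "Tests identified and produced as part of story creation",
        "Automated functional testing",
    ],
    "Build process": [
        "Ad hoc build requests",
        "Repeatable build process executed manually",
        "Build automated, executed on a timed basis",
        "Unit tests integrated with the automated build",
        "Tests and metrics integrated as gatekeeper events",
        "All components advertise dependencies",
        "Product integration tests included; automatic deployment to QA",
    ],
}


def next_steps(current):
    """Map each dimension to the next listed pattern the team could aim for.

    `current` maps a dimension name to a hypothetical 1-based position in
    that dimension's pattern list (the pattern the team exhibits today).
    """
    plan = {}
    for dimension, position in current.items():
        patterns = AMM_PATTERNS[dimension]
        if not 1 <= position <= len(patterns):
            raise ValueError(f"{dimension}: position must be 1..{len(patterns)}")
        if position == len(patterns):
            plan[dimension] = "(already at the last listed pattern)"
        else:
            # 0-based index `position` is the pattern right after the current one.
            plan[dimension] = patterns[position]
    return plan


# Example: a team doing manual functional testing with a fully integrated build.
team = {"Testing": 3, "Build process": 7}
plan = next_steps(team)
for dimension, target in plan.items():
    print(f"{dimension}: {target}")
```

A single position per dimension is a simplification; a real team may exhibit patterns from several positions at once, so the result is better read as a conversation starter than as a score.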

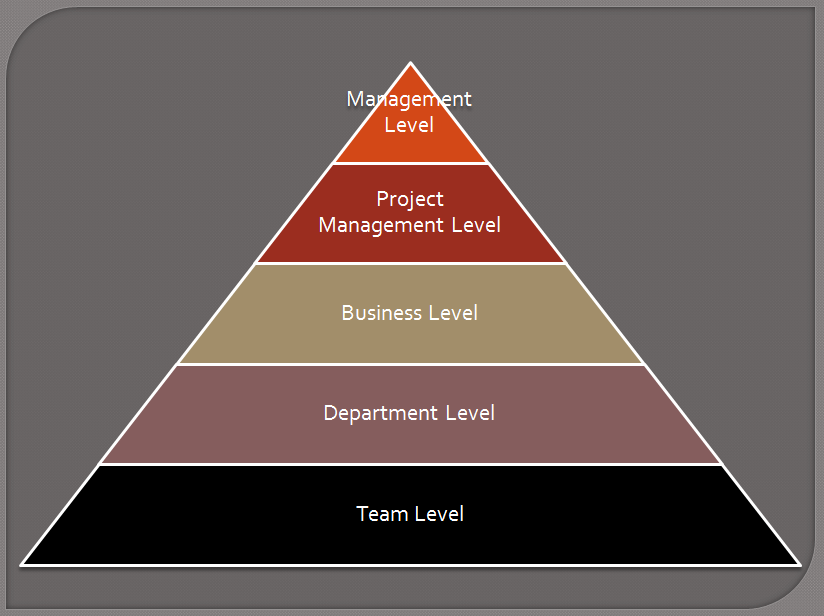

The 5 levels of Maturity

Martin Proulx sketched this agile maturity model:

Level 1 – Team Level Maturity

At this level, team members have decided to adopt Scrum and/or software engineering practices without asking for approval from their manager. Some of the well known practices are used but without consistency.

Level 2 – Department Level Maturity

At this level, the practices adopted by the team members have started to be imitated by other teams within the software development department. Some of the managers have noticed the positive results of adopting the Agile approach and are tempted to replicate what they observed.

Level 3 – Business Level Maturity

At this level, the solution teams have integrated the business people in the model. Collaboration (and trust) has increased and a partnership is developing.

Level 4 – Project Management Level Maturity

At this level, the project management approach is modified to include some of the Scrum practices. Although the department still mostly relies on the traditional PMBOK recommendations, Scrum has been integrated in the project management approach.

Level 5 – Management Level Maturity

At this level, managers have adapted their management style to support an Agile organization. Organizational structures and reporting mechanisms are better adapted for collaboration and improved for increased performance.

This model basically measures how widespread the Agile values are throughout the organization, starting at the team level with certain practices and ending at the maximum level, where the whole organization shares the same values and behaviors.

How to measure Agile maturity then?

It seems there are two dimensions to include when measuring Agile maturity:

- How mature the Agile practices and processes are: this is basically the second approach listed above, and it applies mainly at the project level (or at the team level, in terms of the third model above). How mature is your testing? Do you do all testing manually (or not at all!), or do you have a solid testing strategy that includes a great deal of automation? How mature is your programming? How mature is your requirements management? For each of these dimensions, an agile way of doing things can be defined. The second approach uses behavioral patterns; another option would be to use objectives and key practices.

- How widespread these Agile practices and processes are throughout the organization: a mature Agile organization does business in an Agile way. It is fast to deliver business value, it adapts, and there is a good level of communication and collaboration throughout the company. A model that encompasses this dimension is much more ambitious, but it is probably the only way of making Agile work in an organization. The way the software is built needs to fit the way the organization does business around it. The structure of the organization should enable and encourage the connections that make communication smart and effective. The organization also needs the structure to learn and maintain this Agile culture.

CMMI follows a similar approach: it starts with the practice dimensions (level 2) and moves towards a standardized process across the organization (level 3). This seems the natural progression to follow when seeking maturity: start by mastering Agility at the team level, then manage the whole organization with the same values and principles.

For both dimensions, a means of measuring maturity is needed. As a first attempt, the Shu-Ha-Ri scale will be used.

A very immature Agile organization would then be one at the SHU-SHU level, that is, SHU in both the practices dimension and the organization dimension. A RI-RI organization is one that has mastered the Agile practices at the team level and that manages its whole business with Agility.
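To make the two-dimensional scale concrete, here is a minimal sketch of how such a rating could be recorded. It assumes the label is written as practices first and organization second, and that the three stages can be ordered SHU < HA < RI. The names `ShuHaRi`, `maturity_label`, and `weakest_dimension` are invented for this example.

```python
from enum import IntEnum


class ShuHaRi(IntEnum):
    """The three Shu-Ha-Ri stages, ordered from beginner to master."""
    SHU = 1
    HA = 2
    RI = 3


def maturity_label(practices, organization):
    # ASSUMPTION: the label is written practices first, organization second.
    return f"{practices.name}-{organization.name}"


def weakest_dimension(practices, organization):
    """Name the dimension that lags behind, or 'balanced' if both are equal."""
    if practices == organization:
        return "balanced"
    return "practices" if practices < organization else "organization"


# A team that has mastered the practices inside an organization that is
# still learning to work in an Agile way.
label = maturity_label(ShuHaRi.RI, ShuHaRi.SHU)
lagging = weakest_dimension(ShuHaRi.RI, ShuHaRi.SHU)
print(label, lagging)
```

Keeping the two ratings separate, rather than averaging them, preserves the point made above: strong team practices do not by themselves make the organization Agile.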
